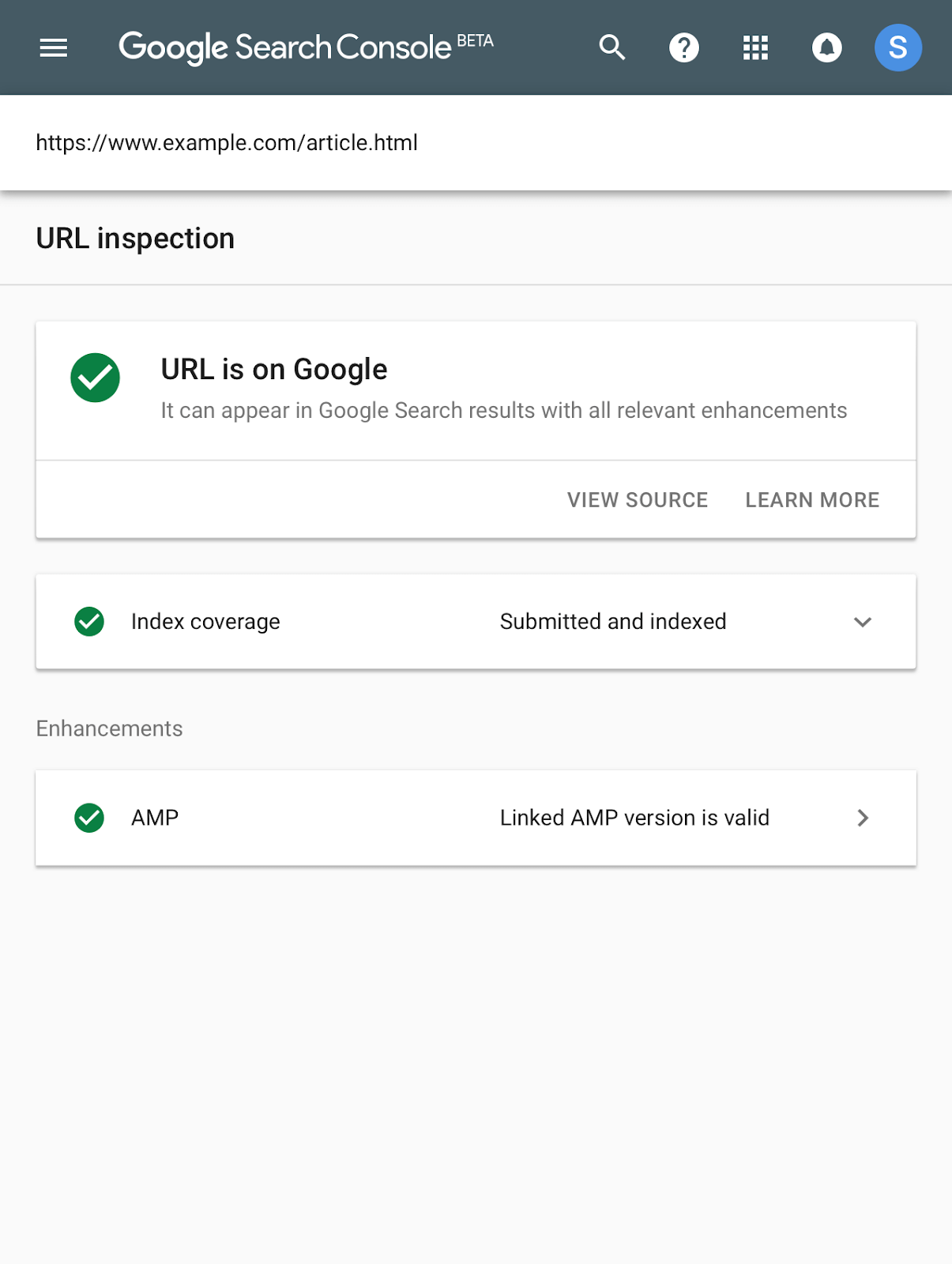

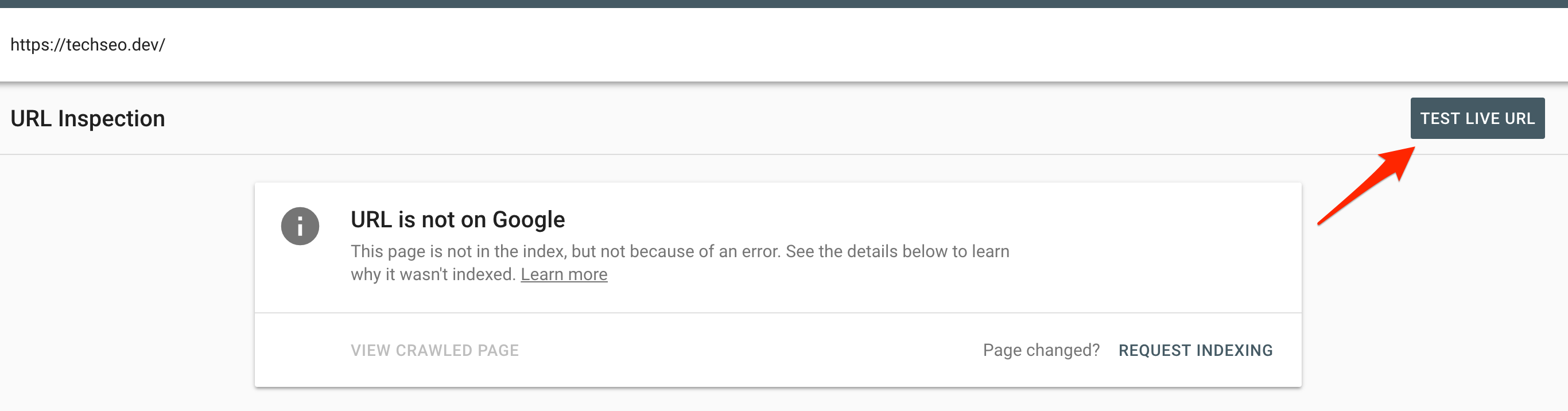

Last year Google launched the beta of the new Google Search Console, but when it first launched it was pretty empty. By June they had added one of the features I now use most often in it, the URL Inspection tool. It lets you quickly see how Google is crawling, indexing and serving a page, request that Google pull a ‘live’ version of the page, and request that the page be (re)indexed.

The Live Inspection Tool will soon replace the ‘fetch as Google’ functionality found in the old Search Console, so it’s worth considering how moving to the new version might limit us.

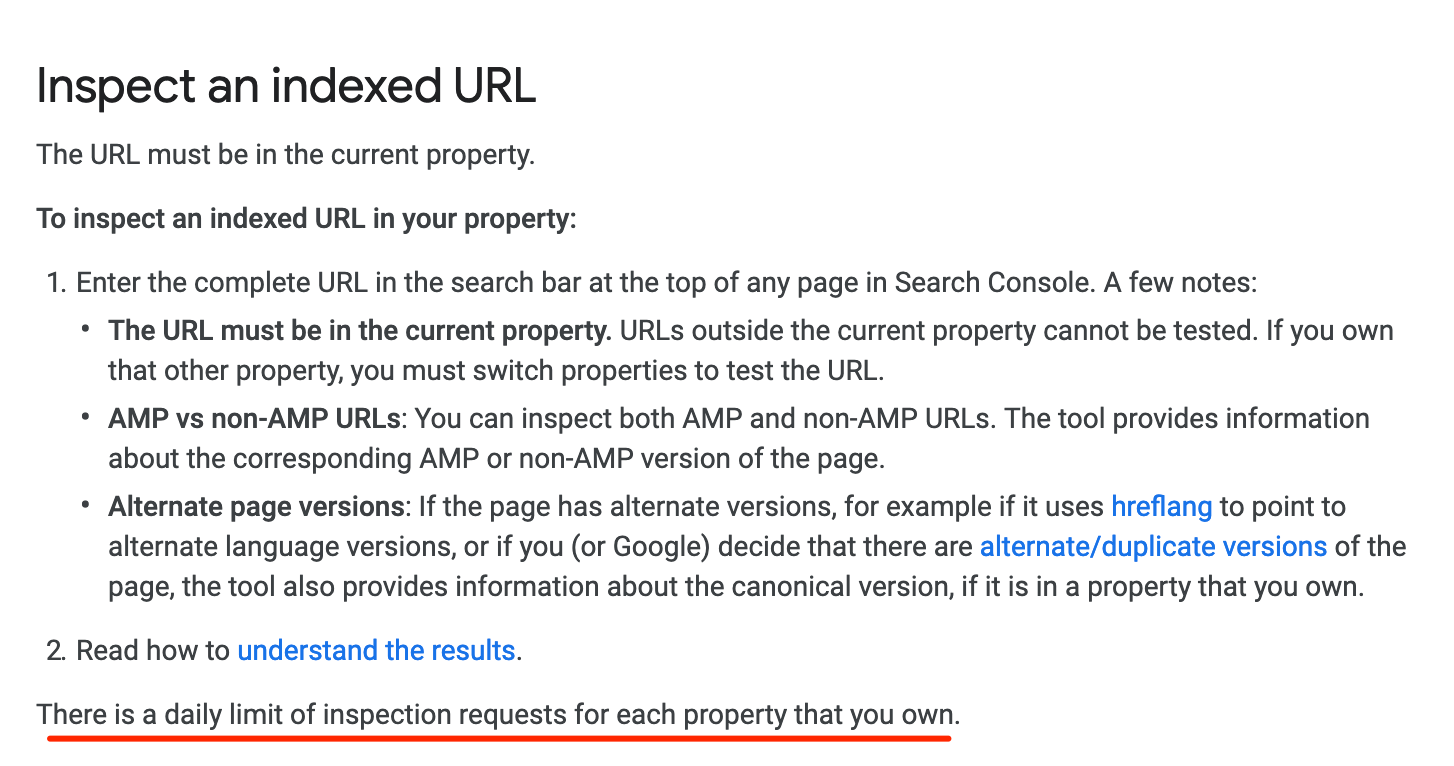

The old fetch and render tool used to be limited to 10 fetches a month, and it had a clear label telling the user exactly how many fetches they had remaining. This label disappeared in February last year, but the actual limit remained:

Yes, there are still limits. I’d really aim to use the more scalable approaches (like having a crawlable site, sitemaps, etc) instead of trying to fudge-force indexing manually.

– John (@JohnMu) February 13, 2018

Since the Live Inspection Tool is far more about understanding and fixing problems with a page than the old ‘fetch as Google’ tool (which I, at least, mostly used to force a page to be indexed or re-crawled), it makes sense for the Live Inspection Tool to have a higher limit. Yet the new tool doesn’t list any limit. We turned to Google’s documentation and, honestly, it could be more helpful.

So, dear readers, we decided to put the Live Inspection Tool to the test with a methodology we can only describe as ‘basic’.

Methodology: We repeatedly mashed this button:

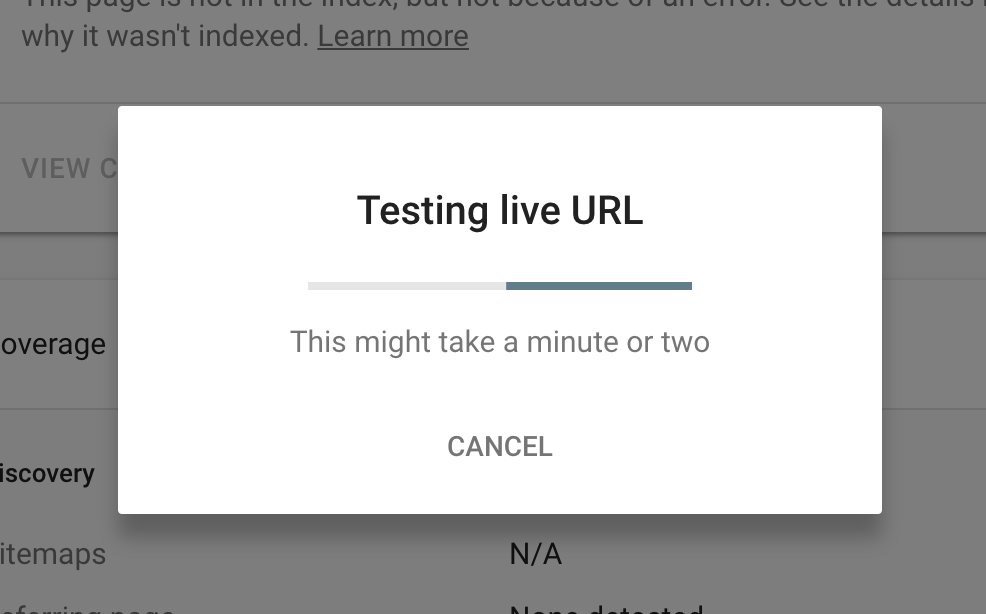

...until Google stopped showing us this:

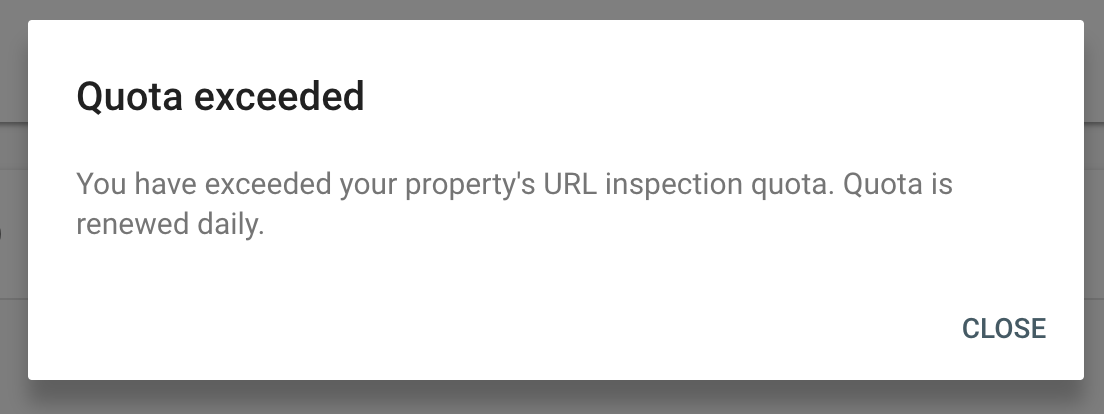

We quickly sailed past 10 attempts without a problem, then 20, then 30. At 40 we wondered whether there really was no limit but, just as we were about to lose hope, on the 50th try we finally hit it.

tl;dr: The daily limit for Live URL Inspection is 50 requests.

How is knowing this actually useful?

Basic

If you’re planning a domain migration, you can add a step to your migration plan: pick out your 50 most important URLs and manually request indexing of those pages on the day of the migration.
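If your analytics tool can export a score per page (sessions, revenue, links; whatever you use to define ‘important’), choosing that batch is just a sort. Below is a minimal Python sketch under that assumption: the `(url, score)` input format and `pick_priority_urls` are hypothetical, and the 50 is the daily limit we found above.

```python
from typing import Iterable

# Daily Live URL Inspection limit we observed in the test above.
DAILY_INSPECTION_LIMIT = 50


def pick_priority_urls(url_scores: Iterable[tuple[str, float]],
                       limit: int = DAILY_INSPECTION_LIMIT) -> list[str]:
    """Return up to `limit` unique URLs, highest score first.

    `url_scores` is a hypothetical export of (url, score) pairs,
    e.g. organic sessions per page from your analytics tool.
    """
    best: dict[str, float] = {}
    for url, score in url_scores:
        # If a URL appears more than once, keep its highest score.
        if url not in best or score > best[url]:
            best[url] = score
    # Sort by score descending, then by URL so ties are deterministic.
    ranked = sorted(best.items(), key=lambda item: (-item[1], item[0]))
    return [url for url, _ in ranked[:limit]]
```

The resulting list is what you would work through by hand in the URL Inspection tool on migration day.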

Intermediate

Taking that a step further, you could take your 100 most important pages and, once the redirects are in place, request indexing of 50 of the old URLs through the old domain’s Search Console property, so that Google picks up the redirects, whilst requesting indexing of the remaining 50 through the new domain’s Search Console property to get those pages into the index quickly.
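A minimal sketch of that split, assuming you already have a redirect map of `(old_url, new_url)` pairs ordered from most to least important (the function name and input format are hypothetical). It only builds the two request lists; you would still submit each list by hand in the matching property.

```python
# Daily Live URL Inspection limit we observed, assumed to apply per property.
DAILY_INSPECTION_LIMIT = 50


def plan_migration_requests(redirects: list[tuple[str, str]],
                            limit: int = DAILY_INSPECTION_LIMIT) -> dict[str, list[str]]:
    """Split priority redirects across the old and new domain properties.

    The first `limit` pairs contribute their OLD URLs (inspected in the old
    domain's property, so Google picks up the redirects); the next `limit`
    pairs contribute their NEW URLs (inspected in the new domain's property).
    """
    top = redirects[: 2 * limit]
    old_batch = [old for old, _ in top[:limit]]
    new_batch = [new for _, new in top[limit:]]
    return {"old_property": old_batch, "new_property": new_batch}
```

Splitting by importance rank rather than at random means the very top pages get their redirects recrawled first, which matches the ordering in the plan above.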

Advanced

This is the ‘let’s try to break it’ option. Fifty URLs a day is nowhere near Bing’s 10k URLs a day, but what if you could request indexing of more than 10k URLs a day through this technique?

Remember that you can register multiple properties for the same site. That opens up an interesting option: automatically register a Search Console property for each major folder on your site (up to Search Console’s limit of 400 properties in a single account), then use the Live Inspection Tool for 50 URLs per property. That gives you up to 20k URLs a day (400 × 50), double Bing’s allowance!

None of this would be particularly difficult using Selenium or Puppeteer. We’ve previously built scripts to automatically mass-register Search Console properties for a client that was undergoing an HTTPS migration and had a couple of hundred properties they needed to move over, and it went without a hitch. We didn’t use that script to mass-request indexing but, if you did, it could allow a migration to happen extremely quickly.

We don’t recommend doing this: I can’t imagine this is how Google wants you to use the tool, though equally I can’t think how they’d actively penalise you for it. Something, perhaps, to try out another day at your own risk. If you do, let me know how it works!
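The arithmetic is simply 400 properties × 50 requests = 20,000 requests a day. As a rough planning sketch, the Python below groups URLs by their first path segment (an assumption about what ‘major folder’ means), caps each folder-level property at 50 requests and stops adding new properties at 400. It doesn’t register properties or touch Search Console at all; `folder_property` and `plan_daily_requests` are hypothetical helpers that only build the daily queue.

```python
from collections import defaultdict
from urllib.parse import urlsplit

# Observed daily Live URL Inspection limit, assumed here to apply per property.
DAILY_INSPECTION_LIMIT = 50
# Search Console's per-account property limit, as quoted in the article.
MAX_PROPERTIES = 400
# 400 properties x 50 requests = 20,000 requests a day.
DAILY_CAPACITY = MAX_PROPERTIES * DAILY_INSPECTION_LIMIT


def folder_property(url: str) -> str:
    """Map a URL to a hypothetical folder-level URL-prefix property.

    https://example.com/blog/post -> https://example.com/blog/
    https://example.com/about     -> https://example.com/  (root-level page)
    """
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    # The first segment counts as a folder if more path follows it,
    # or if the URL itself ends in a slash (e.g. /blog/).
    if segments and (len(segments) > 1 or parts.path.endswith("/")):
        folder = f"/{segments[0]}/"
    else:
        folder = "/"
    return f"{parts.scheme}://{parts.netloc}{folder}"


def plan_daily_requests(urls, limit=DAILY_INSPECTION_LIMIT,
                        max_properties=MAX_PROPERTIES):
    """Assign URLs to folder properties, at most `limit` per property per day."""
    by_property = defaultdict(list)
    for url in urls:
        prop = folder_property(url)
        if prop not in by_property and len(by_property) >= max_properties:
            continue  # no property slots left for a new folder
        if len(by_property[prop]) < limit:
            by_property[prop].append(url)
        # URLs beyond their property's limit are left for another day.
    return dict(by_property)
```

The catch is visible in the design: capacity only scales if your URLs are spread across many folders, since a single large folder still tops out at 50 requests a day.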